Each week I reverse engineer the products of leading tech companies. Get one annotated teardown every Friday.

Growth Dives: The complete guide to test methods

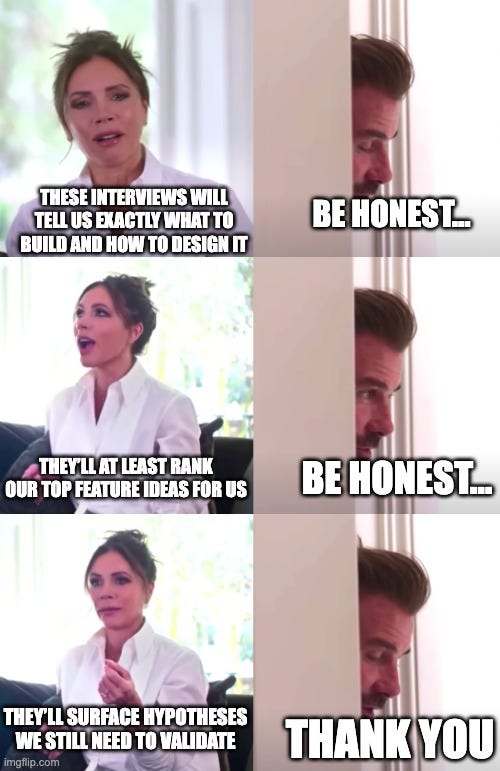

18 methods by time-to-execute, budget and what they can (and can't) do for you

Time and time again, I see teams expecting too much from their research. They’ll run an A/B test to find out the ‘whys.’ They’ll run surveys when they need depth, or spend weeks on interviews when a quick prototype test would do.

Every test method has its strengths, but also situations where it’s simply a waste of time. This is a guide to picking the right method for the stage you’re at, the time you have, and the level of certainty you need. I looooved writing this, as I learned a few new methods (e.g., tree testing, card sorting) in the process!

Most teams pick a method before they know what decision they need to make. So before we get into it, let’s take a step back.

First, decide what you need to learn

Before picking any method, get clear on your research question:

🧠 “Do people have this problem?” → Discovery methods

💡 “Would people want this solution?” → Demand validation

🎨 “Does this design work?” → Usability testing

📊 “Which version performs better?” → Performance testing

Most product decisions follow this rough sequence: discover problems → validate demand → test usability → optimise performance. In practice, though, most people skip steps, loop back, or run methods in parallel. A common pattern is going straight to A/B testing, running lots of tests with a high failure rate, and only then doing some discovery to get better at solving real problems.

As a general rule, if you’re completely new to a problem space, start with discovery. If you’ve got something live but it’s not converting, skip straight to live testing.

Now, without further ado, the 18 methods:

Now let's run through each one by one, starting with discovery.

🔍 Discovery: “Do people have this problem?”

Use when: you’re exploring a new problem space or trying to understand your users better.

1. Support Ticket Analysis

This is simply not done enough. Review your support tickets, chat logs, or reviews to identify recurring issues, confusion, or unmet expectations. Ideally, cluster them into themes if you have the capability.
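If you don't have clustering tooling, even a crude keyword tagger gives you a first cut at grouping tickets. The sketch below is a minimal illustration under stated assumptions, not real clustering: the `THEMES` mapping, the function names and the sample tickets are all hypothetical, and plain substring matching will miss synonyms and can over-match (e.g. "charge" inside "discharge"). Teams with more capability would typically swap in embeddings or topic modelling.

```python
from collections import Counter

# Hypothetical theme -> keyword mapping; replace with themes from your own tickets.
THEMES = {
    "billing": ["refund", "charge", "invoice", "payment"],
    "login": ["password", "login", "log in", "2fa"],
    "export": ["export", "csv", "download"],
}


def tag_ticket(text: str) -> list[str]:
    """Return every theme whose keywords appear in the ticket (case-insensitive substring match)."""
    lowered = text.lower()
    matches = [
        theme
        for theme, keywords in THEMES.items()
        if any(keyword in lowered for keyword in keywords)
    ]
    return matches or ["untagged"]


def theme_counts(tickets: list[str]) -> Counter:
    """Count tickets per theme; a ticket that matches several themes counts toward each."""
    counts: Counter = Counter()
    for ticket in tickets:
        counts.update(tag_ticket(ticket))
    return counts


if __name__ == "__main__":
    sample_tickets = [  # hypothetical tickets
        "I was charged twice, please refund me",
        "Can't log in after resetting my password",
        "How do I export my data to CSV?",
        "The app feels slow on my phone",
    ]
    for theme, count in theme_counts(sample_tickets).most_common():
        print(f"{theme}: {count}")
```

Read the counts as a prioritisation hint rather than a finding: anything landing in `untagged` is a prompt to add keywords or read those tickets by hand.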

2. User Interviews

One-on-one conversations to uncover problems, motivations, and the ‘why’ context you can’t get from analytics.

3. Surveys

Send structured questions to lots of people to get a broad view. Quick, low-cost, and good for spotting patterns at scale, but less useful for deep qualitative insight.

4. Contextual Inquiry or Field Study

Watching users in their natural environment, usually at work or in their daily routine. Essential for B2B tools or complex consumer behaviours.

💹 Demand Validation: “Would people want this?”

Use when: you have a solution idea but haven’t built it yet.

5. Concept Testing

This sits somewhere between discovery and demand validation: you show a mocked-up concept and ask targeted questions. It’s very low fidelity, usually text descriptions, rough wireframes, or slide mockups.

6. Fake Door Tests

Add a button, link, or feature entry point for something that doesn’t exist yet, and see if people click it. If they do, show a message like ‘Thanks for your interest, we’re working on this feature. You’ll be the first to know!’

7. Landing Page Waitlists

A landing page to test positioning, pricing, or a new startup idea, often paired with a signup form or waitlist. Similar to a fake door test, but handing over an email address is a stronger signal of intent than a button click.

8. Wizard of Oz / White Glove Testing

Manually deliver the service behind the scenes. Great for technically complex features, complex algorithms, or service businesses.

9. Reaction Cards

Ask users to pick from a predefined list of words (e.g. “trustworthy,” “confusing”) to describe how they felt about a design. Arguably, this could sit in discovery or usability depending on how it’s used.
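Reaction card results are easy to summarise as the share of participants who picked each word. A minimal sketch, assuming each participant's picks arrive as a list of words; `reaction_summary` and the sample picks are hypothetical.

```python
from collections import Counter


def reaction_summary(responses: list[list[str]]) -> dict[str, float]:
    """Share of participants who picked each word, most-picked first."""
    participants = len(responses)
    if participants == 0:
        return {}
    # set() counts a word at most once per participant, even if it was picked twice.
    counts = Counter(word for picks in responses for word in set(picks))
    return {word: count / participants for word, count in counts.most_common()}


if __name__ == "__main__":
    # Hypothetical picks from three participants shown the same design.
    responses = [
        ["trustworthy", "clean"],
        ["confusing", "clean"],
        ["clean", "trustworthy"],
    ]
    for word, share in reaction_summary(responses).items():
        print(f"{word}: {share:.0%}")
```

Shares rather than raw counts make it easier to compare two designs tested with different numbers of people.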

🏗️ Usability Testing: “Does this design work?”

Use when: you have designs but need to validate that they’re usable.

10. Prototype Testing

Ask users to complete tasks using a clickable prototype. Watch for hesitation, confusion or misclicks, as these signal friction. Keep context minimal, and prompt lightly with questions like “What do you expect here?” or “What’s on your mind?” to surface hidden assumptions.

11. 5-Second Tests

Show someone a screen or image for 5 seconds, then ask what stood out or what they think it’s for. Useful for headlines, hero sections, or pricing pages.

12. Tree Testing

You give people a list of labels (like a menu) and ask them to find something. There are no pictures or colours, just words. This shows whether your labels and their order make sense before you start designing the navigation.

13. First-Click Testing

Like a prototype test, but you give users a task and track where they click first. First clicks often predict success, so this is a great tool for testing button placement, menu structure, or onboarding.
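To check whether first clicks predict success in your own results, you can split task completion by whether the first click landed on the right path. This is a hypothetical sketch: the `Attempt` record and `success_by_first_click` are illustrative names, and it assumes you've already labelled each participant's first click as correct or not.

```python
from dataclasses import dataclass
from typing import Optional


@dataclass
class Attempt:
    first_click_correct: bool  # did the first click land on the expected path?
    completed: bool  # did the participant eventually finish the task?


def success_by_first_click(attempts: list[Attempt]) -> dict[str, Optional[float]]:
    """First-click accuracy, plus task success split by first-click outcome."""

    def success_rate(group: list[Attempt]) -> Optional[float]:
        # None rather than 0 for an empty group, so "no data" isn't read as "always fails".
        return sum(a.completed for a in group) / len(group) if group else None

    right = [a for a in attempts if a.first_click_correct]
    wrong = [a for a in attempts if not a.first_click_correct]
    return {
        "first_click_accuracy": len(right) / len(attempts) if attempts else None,
        "success_if_right": success_rate(right),
        "success_if_wrong": success_rate(wrong),
    }


if __name__ == "__main__":
    # Hypothetical results for one task.
    attempts = [Attempt(True, True), Attempt(True, True), Attempt(False, False), Attempt(False, True)]
    print(success_by_first_click(attempts))
```

If `success_if_right` is much higher than `success_if_wrong`, the first click is doing a lot of the work for that task. With small samples, treat the gap as directional rather than conclusive.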

14. Card Sorting

Users sort content or features into categories that make sense to them. This helps you design intuitive navigation.
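A common way to analyse an open card sort is a co-occurrence (similarity) matrix: for each pair of cards, the share of participants who put them in the same group, whatever they named it. A minimal sketch, assuming each participant's sort is a dict of group name → cards; the function name and sample data are hypothetical.

```python
from collections import defaultdict
from itertools import combinations


def co_occurrence(sorts: list[dict[str, list[str]]]) -> dict[tuple[str, str], float]:
    """For each pair of cards, the share of participants who put them in the same group."""
    together: defaultdict = defaultdict(int)
    for participant_sort in sorts:
        # Group names are ignored: only which cards share a group matters.
        for cards in participant_sort.values():
            # Sorting gives each pair one canonical key, e.g. ("Billing", "Invoices").
            for a, b in combinations(sorted(set(cards)), 2):
                together[(a, b)] += 1
    return {pair: count / len(sorts) for pair, count in together.items()}


if __name__ == "__main__":
    # Hypothetical sorts from two participants.
    sorts = [
        {"Money": ["Invoices", "Billing"], "Me": ["Profile"]},
        {"Account": ["Profile", "Billing"], "Docs": ["Invoices"]},
    ]
    for pair, share in sorted(co_occurrence(sorts).items(), key=lambda kv: -kv[1]):
        print(pair, f"{share:.0%}")
```

Pairs grouped together by most participants are strong candidates for the same navigation section; pairs missing from the result were never grouped together at all.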

🏃‍♀️ Performance Testing: “Which version works better?”

Use when: you have something live and want to optimise it.

15. A/B Testing

One of the best-known methods: you show two versions (A vs B, usually control vs variant) to two randomly assigned groups of real users. Once enough people have seen each version for the difference to be statistically meaningful, you compare results to see which performed better. Best for testing one change at a time, like whether a new button or headline gets more clicks or signups.
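Whether enough people have seen each version is usually judged with a significance test. One standard choice for conversion rates is the two-proportion z-test, sketched below. It's a minimal illustration, not what any particular experimentation tool does (many use sequential or Bayesian methods instead); the function name and numbers are hypothetical, and it assumes large samples and a pooled conversion rate strictly between 0 and 1.

```python
import math


def two_proportion_z_test(conv_a: int, n_a: int, conv_b: int, n_b: int) -> tuple[float, float]:
    """Return (z, two-sided p-value) for the difference in conversion rate, B minus A."""
    rate_a, rate_b = conv_a / n_a, conv_b / n_b
    # Pooled rate under the null hypothesis that both versions convert equally.
    pooled = (conv_a + conv_b) / (n_a + n_b)
    std_err = math.sqrt(pooled * (1 - pooled) * (1 / n_a + 1 / n_b))
    z = (rate_b - rate_a) / std_err
    # Two-sided p-value: 2 * (1 - Phi(|z|)) equals erfc(|z| / sqrt(2)).
    p_value = math.erfc(abs(z) / math.sqrt(2))
    return z, p_value


if __name__ == "__main__":
    # Hypothetical results: A converts 200/5,000 (4%), B converts 250/5,000 (5%).
    z, p = two_proportion_z_test(200, 5000, 250, 5000)
    print(f"z = {z:.2f}, p = {p:.3f}")
```

With those hypothetical numbers, z comes out at roughly 2.4 and p at roughly 0.016, below the conventional 0.05 threshold. Decide your sample size before launching, and avoid stopping the test the moment p first dips below 0.05.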

16. ABC / Multivariate Testing

Similar to A/B, but either with more than two versions (A/B/C) or with several changes tested in combination (multivariate, e.g. button + headline + layout).
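Testing changes in combination multiplies the number of versions, and every extra version splits your traffic further. A minimal sketch of a full-factorial design (every combination gets served); the `ELEMENTS` variants, function names and visitor numbers are hypothetical.

```python
from itertools import product

# Hypothetical variants for each element under test.
ELEMENTS = {
    "button": ["green", "orange"],
    "headline": ["benefit-led", "feature-led", "question"],
    "layout": ["single-column", "two-column"],
}


def combinations_to_test(elements: dict[str, list[str]]) -> list[dict[str, str]]:
    """Every version a full-factorial multivariate test would have to serve."""
    names = list(elements)
    return [dict(zip(names, combo)) for combo in product(*elements.values())]


def visitors_per_combination(total_visitors: int, elements: dict[str, list[str]]) -> int:
    """Visitors each version gets if traffic is split evenly (rounded down)."""
    return total_visitors // len(combinations_to_test(elements))


if __name__ == "__main__":
    print(len(combinations_to_test(ELEMENTS)), "combinations")
    print(visitors_per_combination(30_000, ELEMENTS), "visitors each")
```

With these hypothetical options, 2 × 3 × 2 = 12 combinations share the traffic, so 30,000 visitors gives each one 2,500, versus 15,000 per version in a simple A/B test. That's why multivariate tests suit high-traffic pages.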

17. Session Recordings

Watch real user sessions to spot friction points and where their mouse hovers the most.

18. Diary Studies

Ask people to log what they’re doing or thinking over time. For instance, notifications prompting an emotion check-in, or studies of whether a product changes longer-term habits.

Arguably, this could be a discovery method, but I’ve put it here because I think it’s most helpful for habit-forming products, like therapy, where people self-report on outcomes.

How are you feeling at this point? Overwhelmed? Don’t be.

There are lots of ways to learn, so don’t overthink it

This isn’t a checklist to work through; think of it as a toolbox. Each method has a job. Some are quick and scrappy, others slow and robust. All of them can be misused if you’re unclear about what decision you’re trying to make. Even “tiny” methods, like reviewing support tickets or running a 5-second test, can be enough.

The most important lesson here: don’t just go with your gut. At least not all the time. If you’re tight on time or budget, don’t aim for rigour; aim for direction.

Ask five users what confused them. Look at the last 10 users who churned and find out why. Run a lo-fi prototype test with a colleague.

Got something you use that’s not here? Or something you think’s overrated? I’d love to hear it 👇

See you next week!!!

Rosie 🚲 in the Alps 🚲
